What is Variational Inference 🌱

- Firstly, the word “variational” is a fancy way of saying “optimization-based”: inference is recast as an optimization problem.

The Challenge

Direct inference on our distribution $p$ can be intractable.

- We can approximate inference on $p$ by projecting it onto a family of simpler distributions $\mathcal{Q}$ and performing inference on the projected distribution $q \in \mathcal{Q}$ instead

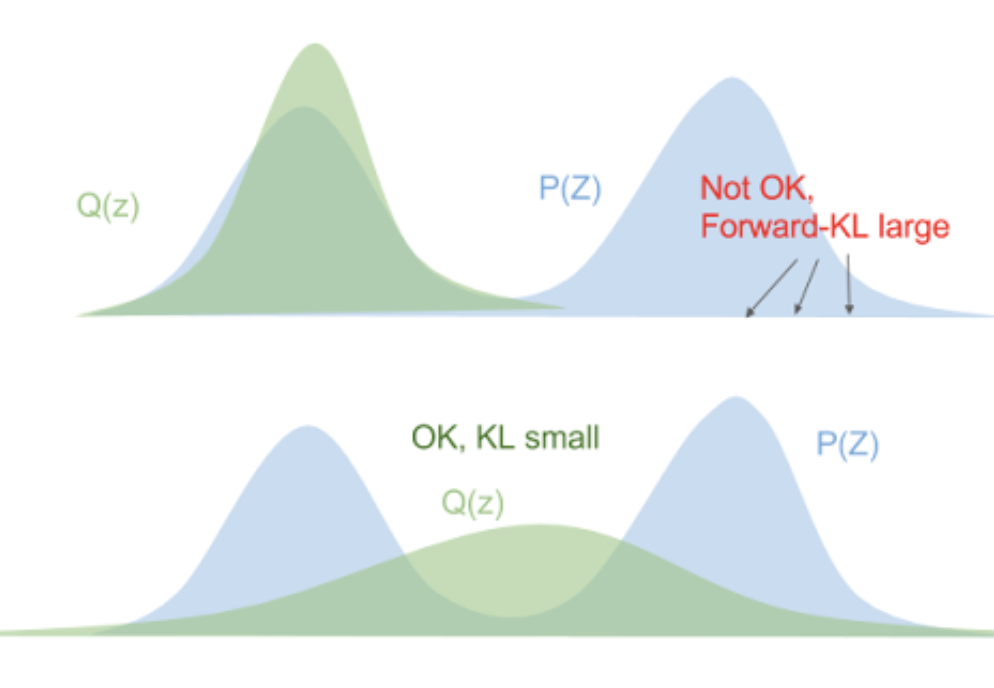

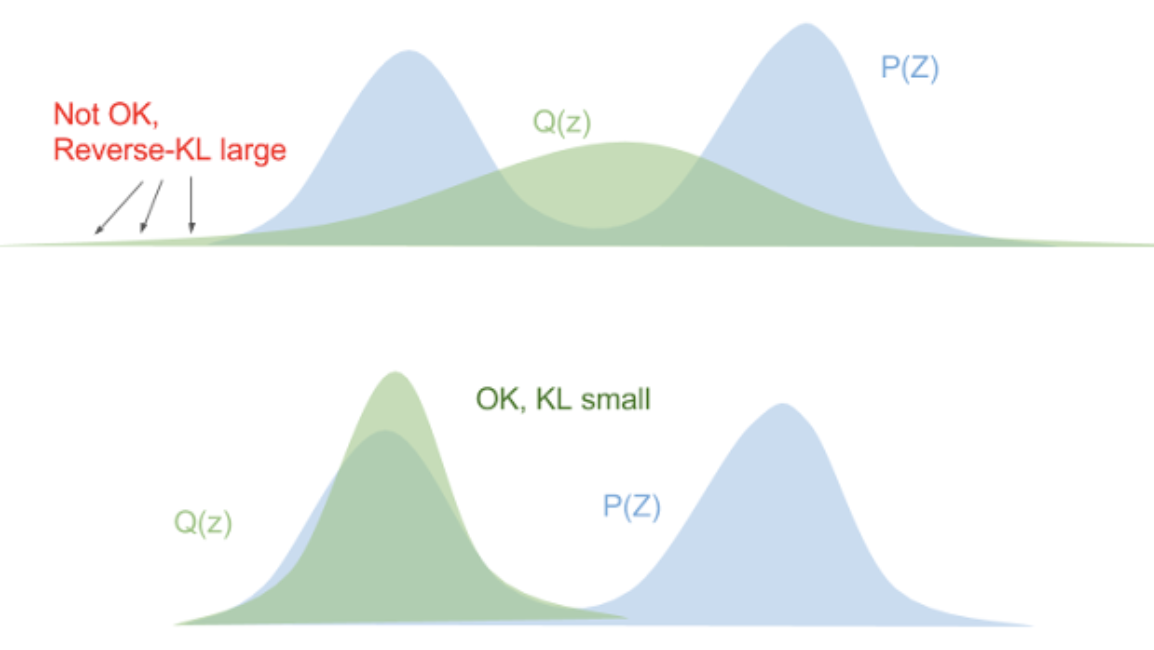

- We select the projection that minimizes the KL divergence

KL Divergence

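$$KL(p\|q) = \sum_x p(x)\log\frac{p(x)}{q(x)} \qquad \text{(forward KL)}$$

$$KL(q\|p) = \sum_x q(x)\log\frac{q(x)}{p(x)} \qquad \text{(reverse KL)}$$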

- We try to minimize the reverse KL, $KL(q\|p)$, because it is mode-seeking: it pushes $q$ to match at least one of the modes of $p$, which is the best we could hope for given the limited shape of our approximation.
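To see the mode-seeking behaviour concretely, here is a minimal Python sketch under illustrative assumptions: the target $p$ is a hypothetical equal mixture of $\mathcal{N}(-3, 1)$ and $\mathcal{N}(3, 1)$, both distributions are discretized on a grid, and the approximating family is single Gaussians fitted by brute-force search over $(\mu, \sigma)$. The grid, search ranges, and helper names (`normal_pdf`, `best_fit`, etc.) are hypothetical choices made only for this example.

```python
import math

# Hypothetical discretization of the real line: 321 points from -8.0 to 8.0.
GRID = [-8.0 + 0.05 * i for i in range(321)]

def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def normalize(weights):
    s = sum(weights)
    return [w / s for w in weights]

def kl(a, b):
    # KL(a || b) on the grid; points where a == 0 contribute nothing.
    return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

# Hypothetical bimodal target: equal mixture of N(-3, 1) and N(3, 1).
p = normalize([0.5 * normal_pdf(x, -3, 1) + 0.5 * normal_pdf(x, 3, 1) for x in GRID])

def best_fit(objective):
    """Brute-force search of the single-Gaussian family for the (mu, sigma) minimizing objective(q)."""
    best = None
    for mu in [m * 0.25 for m in range(-20, 21)]:        # mu in [-5, 5]
        for sigma in [s * 0.25 for s in range(2, 21)]:   # sigma in [0.5, 5]
            q = normalize([normal_pdf(x, mu, sigma) for x in GRID])
            score = objective(q)
            if best is None or score < best[0]:
                best = (score, mu, sigma)
    return best[1], best[2]

mu_rev, sigma_rev = best_fit(lambda q: kl(q, p))  # reverse KL: KL(q || p)
mu_fwd, sigma_fwd = best_fit(lambda q: kl(p, q))  # forward KL: KL(p || q)
print("reverse KL fit:", mu_rev, sigma_rev)
print("forward KL fit:", mu_fwd, sigma_fwd)
```

With these settings the reverse-KL fit should sit on one of the two modes with $\sigma$ near 1, while the forward-KL fit should center near 0 with a much larger $\sigma$, spreading over both modes and the low-probability gap between them. Brute-force search is used here only to keep the example transparent.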

- However, we can’t minimize this directly since it depends on the intractable distribution $p$, so instead we maximize the evidence lower bound (ELBO) (check this link for the math)
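For reference, here is one standard way to see why maximizing the ELBO works. It assumes, as is typical, that $p$ is known only up to a normalizing constant, $p(x) = \tilde{p}(x)/Z$, where $\tilde{p}$ can be evaluated but $Z$ cannot; the symbols $\tilde{p}$ and $Z$ are introduced here just for this sketch.

$$
\begin{aligned}
KL(q\|p) &= \sum_x q(x)\log\frac{q(x)}{p(x)} = \sum_x q(x)\log\frac{q(x)}{\tilde{p}(x)} + \log Z \\
\Longrightarrow\quad \log Z &= \underbrace{\sum_x q(x)\log\tilde{p}(x) - \sum_x q(x)\log q(x)}_{\text{ELBO}(q)} + KL(q\|p)
\end{aligned}
$$

Since $KL(q\|p) \ge 0$, the ELBO is a lower bound on $\log Z$, and since $\log Z$ does not depend on $q$, maximizing the ELBO over the approximating family is equivalent to minimizing $KL(q\|p)$, while only requiring expectations of $\log\tilde{p}$ under $q$.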

Mean Field Approximation

We assume that the distribution $q$ partitions our variables of interest $x = (x_1, \dots, x_n)$ into independent parts. Then, based on this assumption, the joint probability under $q$ factorizes as:

$$q(x_1, \dots, x_n) = \prod_{i=1}^{n} q_i(x_i)$$

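Solving for the optimal $q_j$ while holding the other factors fixed yields (check this link for the math):

$$\log q_j(x_j) = \mathbb{E}_{-j}\left[\log p(x)\right] + \text{const}$$

where $\mathbb{E}_{-j}$ is the expectation over all variables except $x_j$, taken under $\prod_{i \neq j} q_i(x_i)$.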

Now the algorithm goes:

- For each $j$, use the above equation to minimize the overall KL divergence by updating $q_j$ while holding all the other factors constant.

- Repeat until convergence
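A minimal Python sketch of this coordinate-ascent loop, assuming a hypothetical three-variable pairwise binary (Ising-style) model with $x_i \in \{-1, +1\}$ and unnormalized log-probability $\log\tilde{p}(x) = \sum_i \theta_i x_i + \sum_{i<k} W_{ik} x_i x_k$. For this model each factor $q_j$ is determined by its mean $\mu_j = \mathbb{E}_{q_j}[x_j]$, and the mean-field update works out to $\mu_j = \tanh\big(\theta_j + \sum_{k \neq j} W_{jk}\mu_k\big)$. The parameter values and the names `theta`, `W`, `elbo`, and `mean_field` are illustrative assumptions; $\log Z$ is enumerated by brute force only because the model is tiny, so we can check that the ELBO stays below it.

```python
import itertools
import math

# Hypothetical toy model over x_i in {-1, +1}:
#   log p~(x) = sum_i theta[i] x[i] + sum_{i<k} W[i][k] x[i] x[k]
# W is symmetric with a zero diagonal; p = p~ / Z.
theta = [0.2, -0.1, 0.3]
W = [[0.0, 0.5, -0.3],
     [0.5, 0.0, 0.4],
     [-0.3, 0.4, 0.0]]
n = len(theta)

def log_p_tilde(x):
    s = sum(theta[i] * x[i] for i in range(n))
    s += sum(W[i][k] * x[i] * x[k] for i in range(n) for k in range(i + 1, n))
    return s

def elbo(mu):
    """ELBO for a fully factorized q with E_q[x_i] = mu[i], i.e. q_i(+1) = (1 + mu[i]) / 2."""
    # Independence under q gives E[x_i x_k] = mu_i * mu_k for i != k.
    energy = sum(theta[i] * mu[i] for i in range(n))
    energy += sum(W[i][k] * mu[i] * mu[k] for i in range(n) for k in range(i + 1, n))
    entropy = 0.0
    for m in mu:
        for prob in ((1 + m) / 2, (1 - m) / 2):
            if prob > 0:
                entropy -= prob * math.log(prob)
    return energy + entropy

def mean_field(num_sweeps=200, tol=1e-10):
    mu = [0.0] * n  # start from uniform factors q_i(+1) = q_i(-1) = 0.5
    history = [elbo(mu)]
    for _ in range(num_sweeps):
        old = list(mu)
        for j in range(n):
            # Update q_j with all other factors held fixed:
            #   log q_j(x_j) = x_j * h_j + const, h_j = theta_j + sum_{k != j} W[j][k] mu_k
            h = theta[j] + sum(W[j][k] * mu[k] for k in range(n) if k != j)
            mu[j] = math.tanh(h)  # mean of q_j(x_j) proportional to exp(x_j * h)
            history.append(elbo(mu))
        if max(abs(a - b) for a, b in zip(mu, old)) < tol:
            break
    return mu, history

# Exact log Z by enumerating all 2^n states (feasible only because n is tiny).
log_Z = math.log(sum(math.exp(log_p_tilde(x)) for x in itertools.product((-1, 1), repeat=n)))

mu, history = mean_field()
print("mean-field E[x_i]:", mu)
print("final ELBO:", history[-1], "log Z:", log_Z)
```

Each coordinate update maximizes the ELBO exactly in $q_j$, so the recorded ELBO values should be non-decreasing, which makes a handy sanity check when implementing the loop.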
