What is the Wake Sleep Algorithm 🌱

Helmholtz Machine

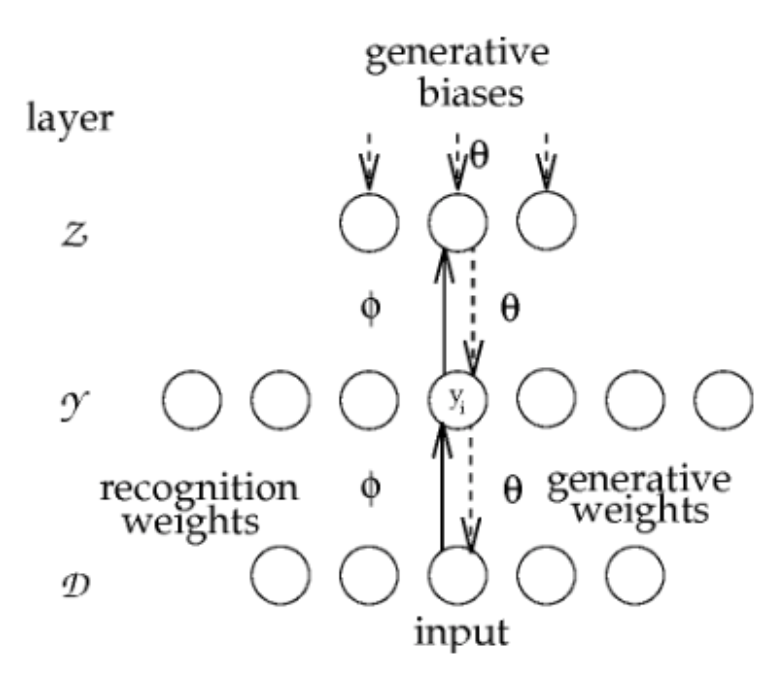

We have two networks:

- The recognition network, with weights $\phi$, converts input data $x$ into latent representations $z$ across successive hidden layers.

- The generative network, with weights $\theta$, reconstructs the data $x$ from the latent states $z$.

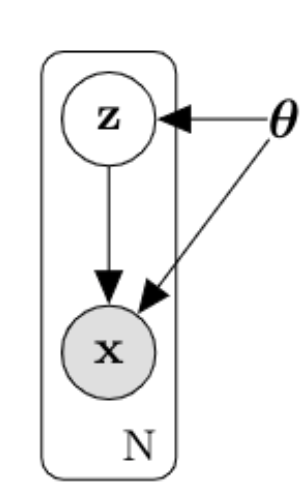
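As a rough illustration of the two networks, here is a minimal NumPy sketch. It assumes a single linear layer per network, a Gaussian latent state, and binary data; the sizes, the dictionary layout of the weights, and the names `recognize` and `generate` are hypothetical and not taken from this note.

```python
import numpy as np

rng = np.random.default_rng(0)
x_dim, z_dim = 6, 2  # hypothetical sizes, chosen only for illustration

# Recognition weights (phi): map x to the mean and log-std of q(z | x).
phi = {
    "W_mu": 0.1 * rng.standard_normal((x_dim, z_dim)), "b_mu": np.zeros(z_dim),
    "W_ls": 0.1 * rng.standard_normal((x_dim, z_dim)), "b_ls": np.zeros(z_dim),
}
# Generative weights (theta): map z to Bernoulli probabilities over x.
theta = {"W": 0.1 * rng.standard_normal((z_dim, x_dim)), "b": np.zeros(x_dim)}


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def recognize(x, phi):
    """Bottom-up pass: data x -> (mu, sigma) of the latent distribution."""
    mu = x @ phi["W_mu"] + phi["b_mu"]
    sigma = np.exp(x @ phi["W_ls"] + phi["b_ls"])  # exp keeps sigma positive
    return mu, sigma


def generate(z, theta):
    """Top-down pass: latent z -> per-component Bernoulli probabilities for x."""
    return sigmoid(z @ theta["W"] + theta["b"])


x = np.array([1.0, 0.0, 1.0, 1.0, 0.0, 0.0])  # one binary data point
mu, sigma = recognize(x, phi)                 # recognition network
z = mu + sigma * rng.standard_normal(z_dim)   # sample a latent state
x_prob = generate(z, theta)                   # generative network
```

The two networks share no weights: the recognition pass only reads `phi` and the generative pass only reads `theta`, which is what lets each phase update one set while holding the other fixed.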

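The Helmholtz machine tries to learn $\phi$ and $\theta$ such that $p_\theta(x)$ models the data and $q_\phi(z \mid x) \approx p_\theta(z \mid x)$, where

- $q_\phi(z \mid x)$, computed by the recognition network, is the variational distribution approximating the true posterior $p_\theta(z \mid x)$ of the generative model.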
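The two phases can be summarized as a pair of objectives. The notation here is an assumption ($q_\phi$ for the recognition network, $p_\theta$ for the generative network), and this is the usual textbook statement of wake-sleep rather than something recovered from this note:

$$
\begin{aligned}
\text{Wake:}\quad & \max_{\theta}\; \mathbb{E}_{x \sim p_{\text{data}}}\, \mathbb{E}_{z \sim q_\phi(z \mid x)} \big[ \log p_\theta(x, z) \big] \\
\text{Sleep:}\quad & \max_{\phi}\; \mathbb{E}_{(x, z) \sim p_\theta(x, z)} \big[ \log q_\phi(z \mid x) \big]
\end{aligned}
$$

The sleep objective is equivalent to minimizing $\mathrm{KL}\big(p_\theta(z \mid x) \,\|\, q_\phi(z \mid x)\big)$ averaged over generated $x$. That is the opposite direction from the KL term in the variational lower bound, so the two phases do not optimize a single shared objective.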

Wake Phase

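- Feed $x$ into the recognition network to get $\mu$ and $\sigma$.

- Draw samples $z^{(k)} \sim \mathcal{N}(\mu, \sigma^2)$.

- For each $z^{(k)}$, feed it into the generative network to get the parameter $\hat{x}^{(k)}$ for the likelihood $\text{Bernoulli}(x; \hat{x}^{(k)})$.

- Optimize $\theta$ for the log-likelihood $\sum_k \log \text{Bernoulli}(x; \hat{x}^{(k)})$, keeping $\phi$ fixed.

- In short: infer latent states by feeding the input data to the recognition network, then maximize how well the generator’s probabilities, given those hidden states, fit the actual data.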
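The wake steps above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions: single linear layers, a Gaussian $q(z \mid x)$, a Bernoulli likelihood, hand-derived gradients, and plain gradient ascent. The function names (`recognize`, `log_lik`, `wake_step`), the sizes, and the learning rate are all hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def recognize(x, phi):
    """Recognition network: x -> (mu, sigma) of q(z | x)."""
    mu = x @ phi["W_mu"] + phi["b_mu"]
    sigma = np.exp(x @ phi["W_ls"] + phi["b_ls"])
    return mu, sigma


def log_lik(x, z, theta):
    """log Bernoulli(x; p) with p = sigmoid(z W + b), summed over components."""
    p = sigmoid(z @ theta["W"] + theta["b"])
    eps = 1e-9  # guards against log(0)
    return np.sum(x * np.log(p + eps) + (1 - x) * np.log(1 - p + eps), axis=-1)


def wake_step(x, phi, theta, rng, n_samples=16, lr=0.1):
    """One wake update: theta changes in place, phi is only read."""
    mu, sigma = recognize(x, phi)                               # feed x upward
    z = mu + sigma * rng.standard_normal((n_samples, mu.size))  # draw samples
    p = sigmoid(z @ theta["W"] + theta["b"])                    # Bernoulli params
    err = x - p                                # d log Bernoulli / d logits
    theta["W"] += lr * z.T @ err / n_samples   # gradient ascent on theta
    theta["b"] += lr * err.mean(axis=0)
    return theta


rng = np.random.default_rng(0)
x_dim, z_dim = 6, 2  # hypothetical sizes
phi = {"W_mu": 0.1 * rng.standard_normal((x_dim, z_dim)), "b_mu": np.zeros(z_dim),
       "W_ls": np.zeros((x_dim, z_dim)), "b_ls": np.zeros(z_dim)}
theta = {"W": np.zeros((z_dim, x_dim)), "b": np.zeros(x_dim)}
x = np.array([1.0, 0.0, 1.0, 1.0, 0.0, 0.0])  # a single binary data point

# Fixed evaluation samples from q(z | x) to compare before and after training.
mu_eval, sigma_eval = recognize(x, phi)
z_eval = mu_eval + sigma_eval * rng.standard_normal((500, z_dim))
ll_before = log_lik(x, z_eval, theta).mean()
for _ in range(200):
    wake_step(x, phi, theta, rng)
ll_after = log_lik(x, z_eval, theta).mean()
```

Comparing `ll_before` with `ll_after` shows whether the generator has become better at explaining `x` from the latent states the recognition network proposes. The recognition weights are never updated in this phase.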

Sleep Phase

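- Draw $z \sim \mathcal{N}(0, I)$.

- Sample $x$ from the generative network.

- Feed $x$ into the recognition network to get $\mu$ and $\sigma$.

- Compute $\log q_\phi(z \mid x) = \log \mathcal{N}(z; \mu, \sigma^2)$.

- Optimize $\phi$ to maximize this log-probability, keeping $\theta$ fixed.

- In short: simulate random data by following the generator, then maximize the probability that the recognition network assigns to the correct latent states $z$ given the simulated $x$.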
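The sleep steps above can be sketched the same way. Again this is only an illustration under assumptions: linear single-layer networks, a standard normal prior, hand-derived gradients of the Gaussian log-density, and a mini-batch of "dreamed" samples per update. The names `dream`, `log_q`, and `sleep_step`, the fixed random generator weights, and the hyperparameters are hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def dream(theta, rng, n):
    """Sample (z, x) from the generative model: z ~ N(0, I), x ~ Bernoulli(p)."""
    z = rng.standard_normal((n, theta["W"].shape[0]))
    p = sigmoid(z @ theta["W"] + theta["b"])
    x = (rng.random(p.shape) < p).astype(float)
    return z, x


def log_q(z, x, phi):
    """log N(z; mu(x), sigma(x)^2), summed over latent dimensions."""
    mu = x @ phi["W_mu"] + phi["b_mu"]
    log_sigma = x @ phi["W_ls"] + phi["b_ls"]
    r = (z - mu) / np.exp(log_sigma)
    return np.sum(-0.5 * np.log(2 * np.pi) - log_sigma - 0.5 * r**2, axis=-1)


def sleep_step(phi, theta, rng, batch=32, lr=0.02):
    """One sleep update: phi changes in place, theta is only read."""
    z, x = dream(theta, rng, batch)              # draw z, then sample x
    mu = x @ phi["W_mu"] + phi["b_mu"]           # feed x to recognition network
    sigma = np.exp(x @ phi["W_ls"] + phi["b_ls"])
    r = (z - mu) / sigma
    d_mu = r / sigma       # d log q / d mu
    d_ls = r**2 - 1.0      # d log q / d log_sigma
    phi["W_mu"] += lr * x.T @ d_mu / batch       # gradient ascent on phi
    phi["b_mu"] += lr * d_mu.mean(axis=0)
    phi["W_ls"] += lr * x.T @ d_ls / batch
    phi["b_ls"] += lr * d_ls.mean(axis=0)
    return phi


rng = np.random.default_rng(0)
x_dim, z_dim = 6, 2  # hypothetical sizes
# A fixed generator whose output depends strongly on z (random weights).
theta = {"W": 3.0 * rng.standard_normal((z_dim, x_dim)), "b": np.zeros(x_dim)}
# Recognition network starts at mu = 0, sigma = 1, so q equals the prior.
phi = {"W_mu": np.zeros((x_dim, z_dim)), "b_mu": np.zeros(z_dim),
       "W_ls": np.zeros((x_dim, z_dim)), "b_ls": np.zeros(z_dim)}

# Fixed held-out dreams to compare before and after training.
z_eval, x_eval = dream(theta, np.random.default_rng(1), 2000)
lq_before = log_q(z_eval, x_eval, phi).mean()
for _ in range(1000):
    sleep_step(phi, theta, rng)
lq_after = log_q(z_eval, x_eval, phi).mean()
```

Because the latent state that produced each dreamed `x` is known exactly, the recognition network gets a fully supervised target in this phase. The comparison of `lq_before` and `lq_after` measures how much better it recovers `z` than the prior alone.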
