What is the Hidden Markov Model? 🌱

The Hidden Markov Model (HMM) is a time series model that assumes the data are generated by two kinds of variables: observed variables that we see, and hidden variables that we never see directly but that determine the values we observe. The HMM makes two main assumptions:

- Markov assumption: $P(q_t \mid q_1, \dots, q_{t-1}) = P(q_t \mid q_{t-1})$. The next hidden state depends only on the immediately preceding hidden state and on none of the earlier ones.

- Output independence: $P(o_t \mid q_1, \dots, q_T, o_1, \dots, o_{t-1}, o_{t+1}, \dots, o_T) = P(o_t \mid q_t)$. The observation at a given time point depends only on the hidden state at that same time point.
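Taken together, the first (Markov) assumption and the second (output-independence) assumption let the joint probability of a hidden state sequence $q_1, \dots, q_T$ and an observation sequence $o_1, \dots, o_T$ factor into purely local terms. A short derivation sketch, assuming the first state is drawn from some initial distribution $P(q_1)$:

$$
P(o_1, \dots, o_T, q_1, \dots, q_T) = \underbrace{P(q_1) \prod_{t=2}^{T} P(q_t \mid q_{t-1})}_{\text{Markov assumption}} \; \underbrace{\prod_{t=1}^{T} P(o_t \mid q_t)}_{\text{output independence}}
$$

The first product is the chain rule applied to the states, with each conditional truncated to the previous state; the second is the chain rule applied to the observations given the states, with every dependency except the current state dropped.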

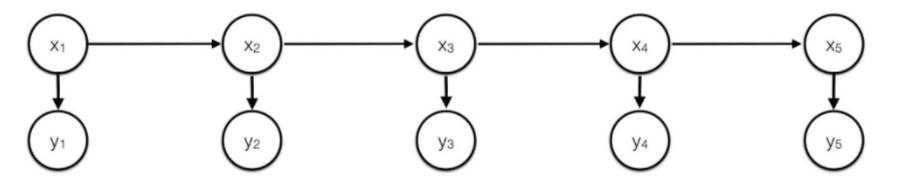

The figure above visualizes the HMM, where $q_t$ denotes the hidden states and $o_t$ the observed values.

There are 3 main problems we can solve with the HMM, where $A$ is the matrix of transition probabilities, $a_{ij} = P(q_{t+1} = j \mid q_t = i)$, $B$ is the matrix of emission probabilities, $b_j(o_t) = P(o_t \mid q_t = j)$, $O = o_1, \dots, o_T$ is the sequence of observed values, and $Q = q_1, \dots, q_T$ is the sequence of states. This model assumes that the transition and emission probabilities are constant over time (but this can be generalized by having $A$ as a tensor with an extra time index, $a_{t,ij}$).
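To make these definitions concrete, here is a minimal NumPy sketch of a toy HMM. The parameter values are made up, states and observation symbols are integer indices, and `pi` (the initial state distribution $P(q_1)$) is an assumption of this sketch, since the definitions above name only $A$ and $B$. `sample` draws a sequence that obeys the two assumptions, and `joint_prob` evaluates $P(O, Q \mid A, B)$ as a product of local terms.

```python
import numpy as np

# Toy HMM: 2 hidden states, 3 observation symbols (made-up values).
# A[i, j] = P(q_{t+1} = j | q_t = i); each row sums to 1.
A = np.array([[0.7, 0.3],
              [0.4, 0.6]])
# B[j, k] = P(o_t = k | q_t = j); each row sums to 1.
B = np.array([[0.5, 0.4, 0.1],
              [0.1, 0.3, 0.6]])
# pi[i] = P(q_1 = i): assumed initial distribution (not named in the note).
pi = np.array([0.6, 0.4])

def sample(A, B, pi, T, rng):
    """Draw a hidden state sequence Q and observation sequence O of length T."""
    Q = np.empty(T, dtype=int)
    O = np.empty(T, dtype=int)
    Q[0] = rng.choice(len(pi), p=pi)
    for t in range(T):
        if t > 0:
            # Markov assumption: only the previous state is consulted.
            Q[t] = rng.choice(A.shape[1], p=A[Q[t - 1]])
        # Output independence: o_t is drawn using q_t alone.
        O[t] = rng.choice(B.shape[1], p=B[Q[t]])
    return Q, O

def joint_prob(O, Q, A, B, pi):
    """P(O, Q | A, B): one initial term, T-1 transition terms, T emission terms."""
    p = pi[Q[0]] * B[Q[0], O[0]]
    for t in range(1, len(O)):
        p *= A[Q[t - 1], Q[t]] * B[Q[t], O[t]]
    return p

rng = np.random.default_rng(0)
Q, O = sample(A, B, pi, T=5, rng=rng)
print(Q, O, joint_prob(O, Q, A, B, pi))
```

Summing `joint_prob` over every possible $Q$ gives $P(O \mid A, B)$, but that sum has $N^T$ terms for $N$ states, which is why dedicated dynamic-programming algorithms are used in practice.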

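- Compute $P(O \mid A, B)$: the likelihood of an observation sequence.

- Compute $Q^* = \text{argmax}_{Q} P(Q \mid O, A, B)$: the most likely sequence of hidden states (decoding).

- Compute $A^*, B^* = \text{argmax}_{A,B} P(O \mid A, B)$: the parameters that best explain the observations (learning).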
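A minimal NumPy sketch of the standard algorithms for these three problems: the forward algorithm for the likelihood, the Viterbi algorithm for decoding, and one Baum-Welch (EM) step for learning. The toy parameters and the initial distribution `pi` are assumptions for illustration. Only Viterbi works in log space; the forward and Baum-Welch code use raw probabilities, which underflow on long sequences, so treat this as a sketch rather than a production implementation.

```python
import numpy as np

# Toy parameters (made-up values): 2 hidden states, 3 observation symbols.
A = np.array([[0.7, 0.3], [0.4, 0.6]])            # A[i, j] = P(q_{t+1}=j | q_t=i)
B = np.array([[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]])  # B[j, k] = P(o_t=k | q_t=j)
pi = np.array([0.6, 0.4])                         # assumed initial distribution P(q_1)

def forward(O, A, B, pi):
    """Problem 1: P(O | A, B) via the forward algorithm in O(T N^2)."""
    alpha = pi * B[:, O[0]]               # alpha_1(i) = pi_i b_i(o_1)
    for o in O[1:]:
        alpha = (alpha @ A) * B[:, o]     # alpha_t(j) = sum_i alpha_{t-1}(i) a_ij b_j(o_t)
    return alpha.sum()

def viterbi(O, A, B, pi):
    """Problem 2: argmax_Q P(Q | O, A, B), equivalently argmax_Q P(Q, O | A, B)."""
    T, N = len(O), len(pi)
    delta = np.log(pi) + np.log(B[:, O[0]])
    back = np.zeros((T, N), dtype=int)
    for t in range(1, T):
        scores = delta[:, None] + np.log(A)   # scores[i, j]: best path ending in i, then i -> j
        back[t] = scores.argmax(axis=0)       # best predecessor for each state j
        delta = scores.max(axis=0) + np.log(B[:, O[t]])
    path = [int(delta.argmax())]
    for t in range(T - 1, 0, -1):             # follow back-pointers from the end
        path.append(int(back[t, path[-1]]))
    return path[::-1]

def baum_welch_step(O, A, B, pi):
    """Problem 3: one EM (Baum-Welch) re-estimation of pi, A and B."""
    O = np.asarray(O)
    T, N = len(O), len(pi)
    alpha = np.zeros((T, N))
    beta = np.ones((T, N))
    alpha[0] = pi * B[:, O[0]]
    for t in range(1, T):
        alpha[t] = (alpha[t - 1] @ A) * B[:, O[t]]
    for t in range(T - 2, -1, -1):
        beta[t] = A @ (B[:, O[t + 1]] * beta[t + 1])  # beta_t(i) = sum_j a_ij b_j(o_{t+1}) beta_{t+1}(j)
    likelihood = alpha[-1].sum()
    gamma = alpha * beta / likelihood                 # gamma[t, i] = P(q_t=i | O)
    # xi[t, i, j] = P(q_t=i, q_{t+1}=j | O)
    xi = alpha[:-1, :, None] * A[None] * (B[:, O[1:]].T * beta[1:])[:, None, :] / likelihood
    new_pi = gamma[0]
    new_A = xi.sum(axis=0) / gamma[:-1].sum(axis=0)[:, None]
    new_B = np.stack([gamma[O == k].sum(axis=0) for k in range(B.shape[1])], axis=1)
    new_B /= gamma.sum(axis=0)[:, None]
    return new_A, new_B, new_pi

O = [0, 1, 2, 2, 1]
print(forward(O, A, B, pi))
print(viterbi(O, A, B, pi))
A2, B2, pi2 = baum_welch_step(O, A, B, pi)
print(forward(O, A2, B2, pi2) >= forward(O, A, B, pi))  # EM never decreases the likelihood
```

Because Baum-Welch only guarantees that $P(O \mid A, B)$ does not decrease from one step to the next, iterating it converges to a local maximum, not necessarily the global argmax in the third problem.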
