What is the Dirichlet Process 🌱

A Dirichlet distribution is a distribution over probability vectors based on some parameters. What if we want those parameters to be random?

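- A Dirichlet process is a distribution over probability distributions: each draw from it is itself a (discrete) distribution, which can then serve as a random distribution over parameters.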

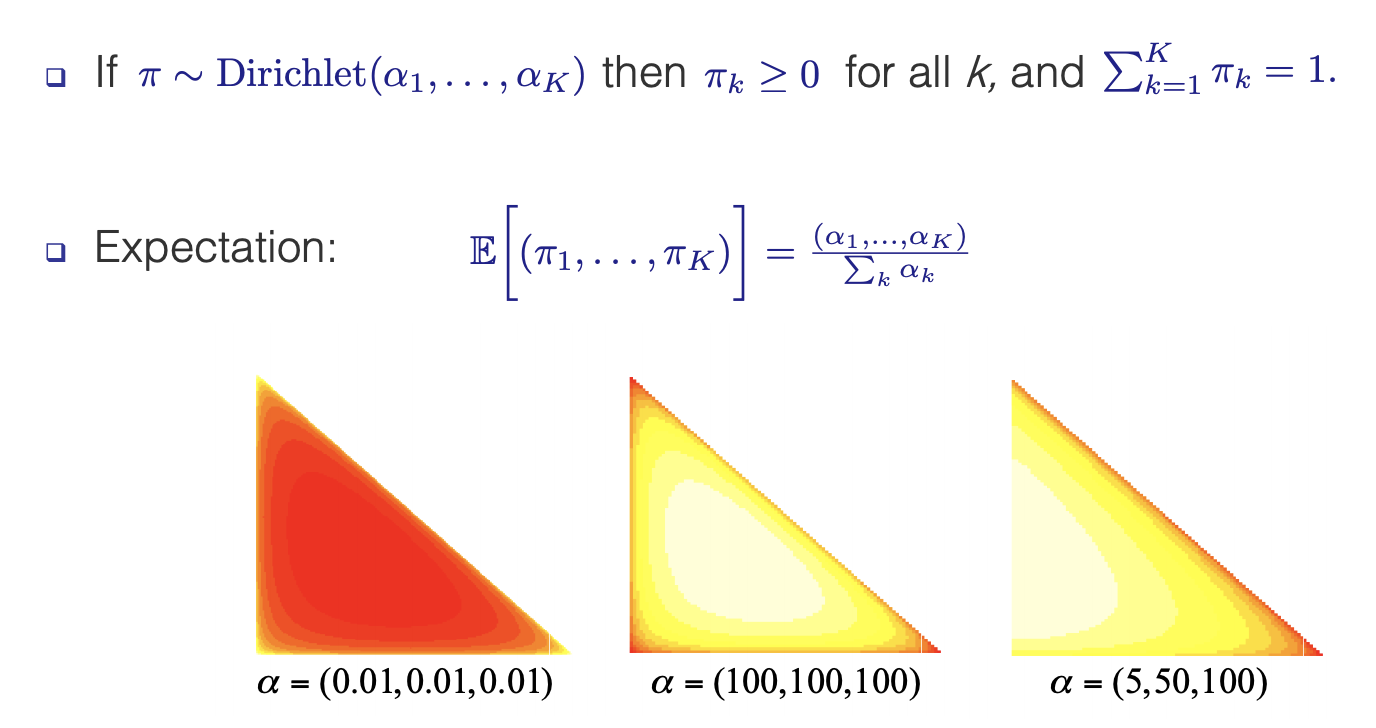

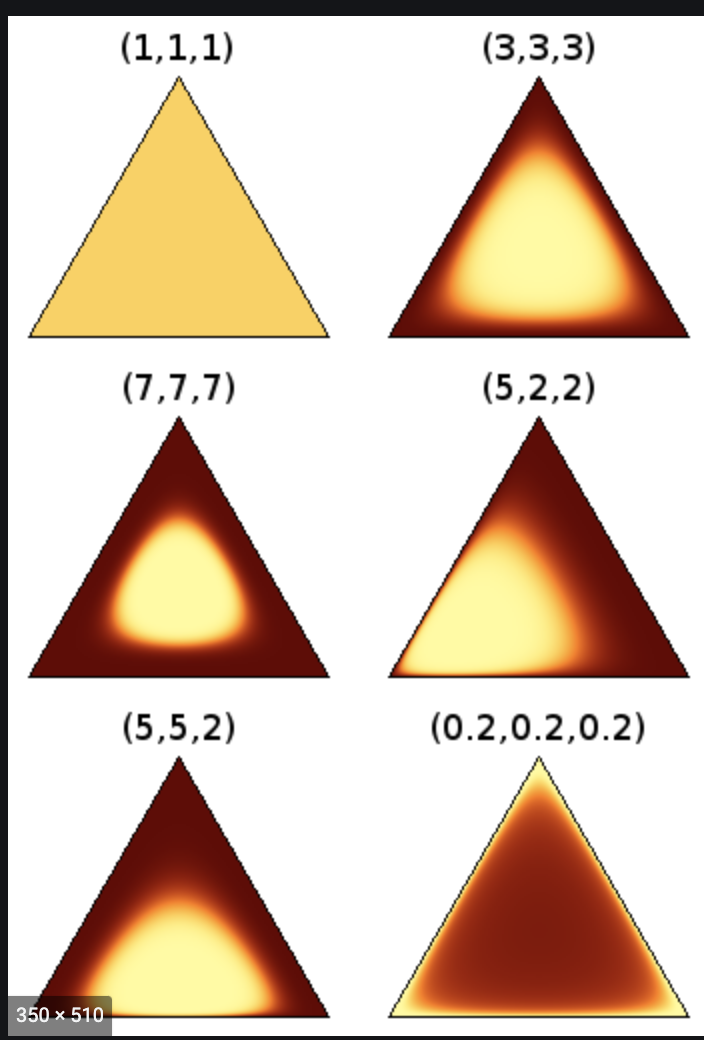

Dirichlet Distribution

- A Dirichlet distribution is often used to probabilistically categorize events among several categories. Suppose that weather events take a Dirichlet distribution. We might then think that tomorrow’s weather has probability of being sunny equal to 0.25, probability of rain equal to 0.5, and probability of snow equal to 0.25. Collecting these values in a vector creates a vector of probabilities.

- The Dirichlet distribution is a distribution over positive vectors that sum to one (probabilities).

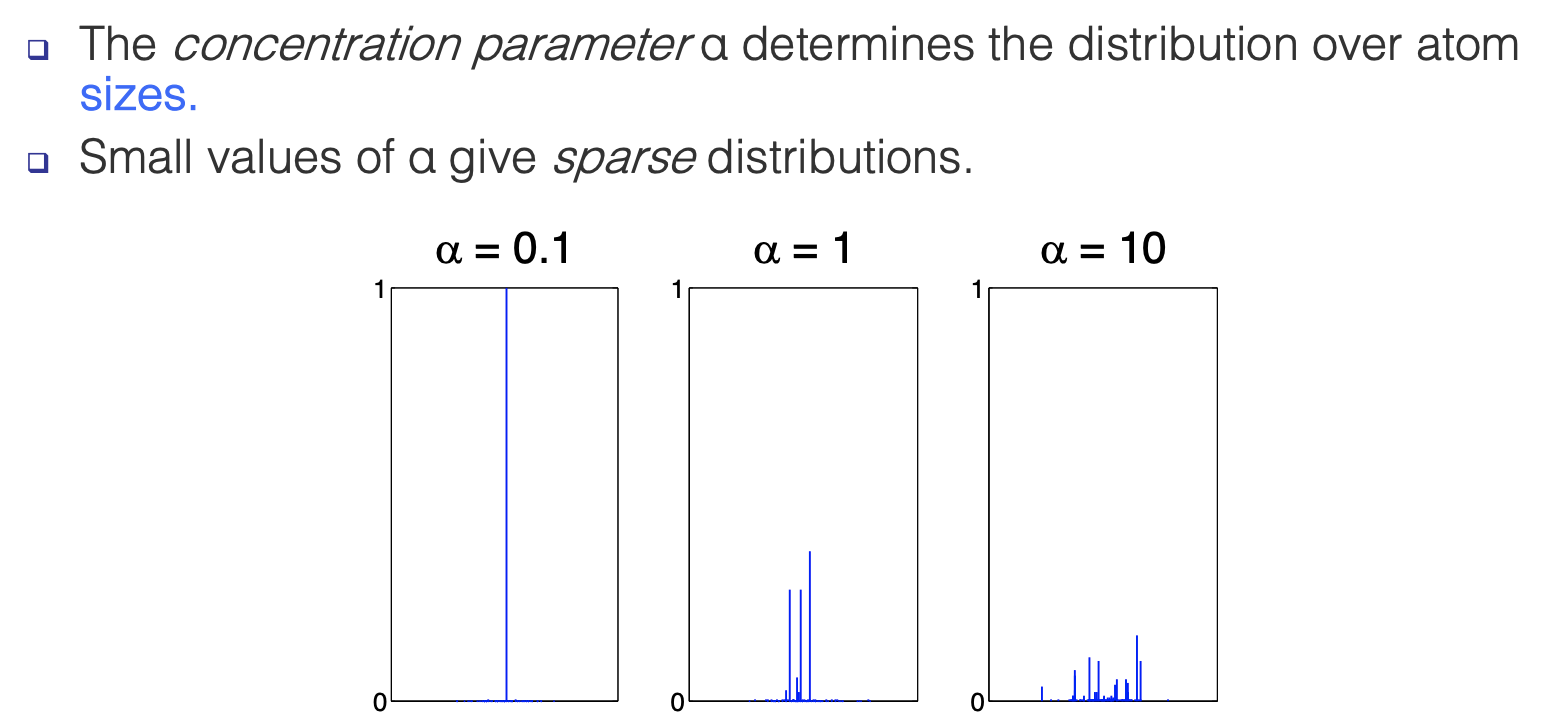

- [Figure: Dirichlet distributions with different parameters]
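As a rough illustration of the weather example, the sketch below draws probability vectors from a Dirichlet distribution with NumPy. The concentration parameters `alpha` are a hypothetical choice (not from the note), picked so that the mean vector is (0.25, 0.5, 0.25).

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

# Hypothetical concentration parameters for (sunny, rain, snow).
alpha = np.array([2.5, 5.0, 2.5])

# Each row is one probability vector drawn from Dirichlet(alpha).
samples = rng.dirichlet(alpha, size=10_000)

row_sums = samples.sum(axis=1)         # every row sums to 1
empirical_mean = samples.mean(axis=0)  # close to alpha / alpha.sum()
expected_mean = alpha / alpha.sum()    # (0.25, 0.5, 0.25)

print(samples[:3])
print(empirical_mean, expected_mean)
```

Scaling `alpha` up (e.g. multiplying it by 10) keeps the same mean but concentrates the draws more tightly around it.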

Relation to Beta Distribution

- A beta distribution is often used to describe a distribution of probabilities of dichotomous events, so it’s restricted to the unit interval.

- For example, for a Bernoulli trial, there is only a parameter $p$ describing the probability of a “success.” Often we think of $p$ as being fixed, but if we are uncertain about the “true” value of $p$, we could think about a distribution of all possible $p$s, with a larger likelihood for those we consider more plausible, so perhaps $p \sim \mathrm{Beta}(\alpha, \beta)$, where $\alpha > \beta$ concentrates more of the mass near 1 and $\alpha < \beta$ concentrates more of the mass near 0.

- Extending the beta distribution into three or more categories gives us the Dirichlet distribution.
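The relation between the two distributions can be checked numerically. This is a minimal sketch with illustrative parameter values (assumptions, not taken from the note): it compares two Beta distributions and shows that the first coordinate of a two-category Dirichlet behaves like the matching Beta.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000

# Illustrative parameter choices, not taken from the note.
near_one = rng.beta(5.0, 2.0, size=n)   # first parameter larger: mass shifted toward 1
near_zero = rng.beta(2.0, 5.0, size=n)  # second parameter larger: mass shifted toward 0

# A two-category Dirichlet is a Beta in disguise: its first coordinate
# follows Beta(5, 2) and the second coordinate is 1 minus the first.
dir2 = rng.dirichlet([5.0, 2.0], size=n)
first_coord = dir2[:, 0]

print(near_one.mean(), near_zero.mean(), first_coord.mean())
```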

Defining the Dirichlet Process

Definition 1

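Let $H$ be a distribution on some space $\Theta$ (e.g. a Gaussian distribution on the real line) with the following known:

$$
\pi \sim \mathrm{GEM}(\alpha), \qquad \theta_k \sim H \quad \text{for } k = 1, 2, \dots
$$

Then

$$
G = \sum_{k=1}^{\infty} \pi_k \, \delta_{\theta_k} \sim \mathrm{DP}(\alpha, H)
$$

where $\delta_{\theta_k}$ is the indicator which is zero everywhere except for $\delta_{\theta_k}(\theta_k) = 1$.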
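Assuming Definition 1 is the stick-breaking construction (weights from a stick-breaking process with concentration `alpha`, atom locations drawn independently from the base distribution), a truncated draw can be sketched as follows. The function name `stick_breaking_dp`, the `base_sampler` callable, and the truncation level `num_atoms` are hypothetical choices for illustration; an exact draw has infinitely many atoms.

```python
import numpy as np

def stick_breaking_dp(alpha, base_sampler, num_atoms, rng):
    """Truncated stick-breaking draw from a DP with concentration alpha.

    Returns (weights, atoms): weights[k] is the mass placed on atoms[k].
    `base_sampler(rng, size)` is a hypothetical callable drawing from the base.
    """
    # Fraction of the remaining stick broken off at each step: Beta(1, alpha).
    betas = rng.beta(1.0, alpha, size=num_atoms)
    # Stick length left before each break: product of (1 - beta_j) for j < k.
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - betas[:-1])))
    weights = betas * remaining
    atoms = base_sampler(rng, num_atoms)  # locations, independent of the weights
    return weights, atoms

rng = np.random.default_rng(2)
gaussian_base = lambda rng, size: rng.normal(0.0, 1.0, size=size)  # assumed base: N(0, 1)

weights, atoms = stick_breaking_dp(alpha=2.0, base_sampler=gaussian_base,
                                   num_atoms=200, rng=rng)
# Draw data points from the (truncated) discrete distribution.
draws = rng.choice(atoms, size=1000, p=weights / weights.sum())
print(weights[:5], weights.sum(), len(np.unique(draws)))
```

Because the drawn distribution is discrete, the 1000 data points repeat a limited set of atom values, which is what makes the process useful for clustering.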

Definition 2

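A Dirichlet process is the unique distribution over probability distributions on some space $\Theta$ such that, for any finite partition $A_1, \dots, A_K$ of $\Theta$, the random masses assigned to the cells of the partition are jointly Dirichlet distributed. So if $G \sim \mathrm{DP}(\alpha, H)$, then: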

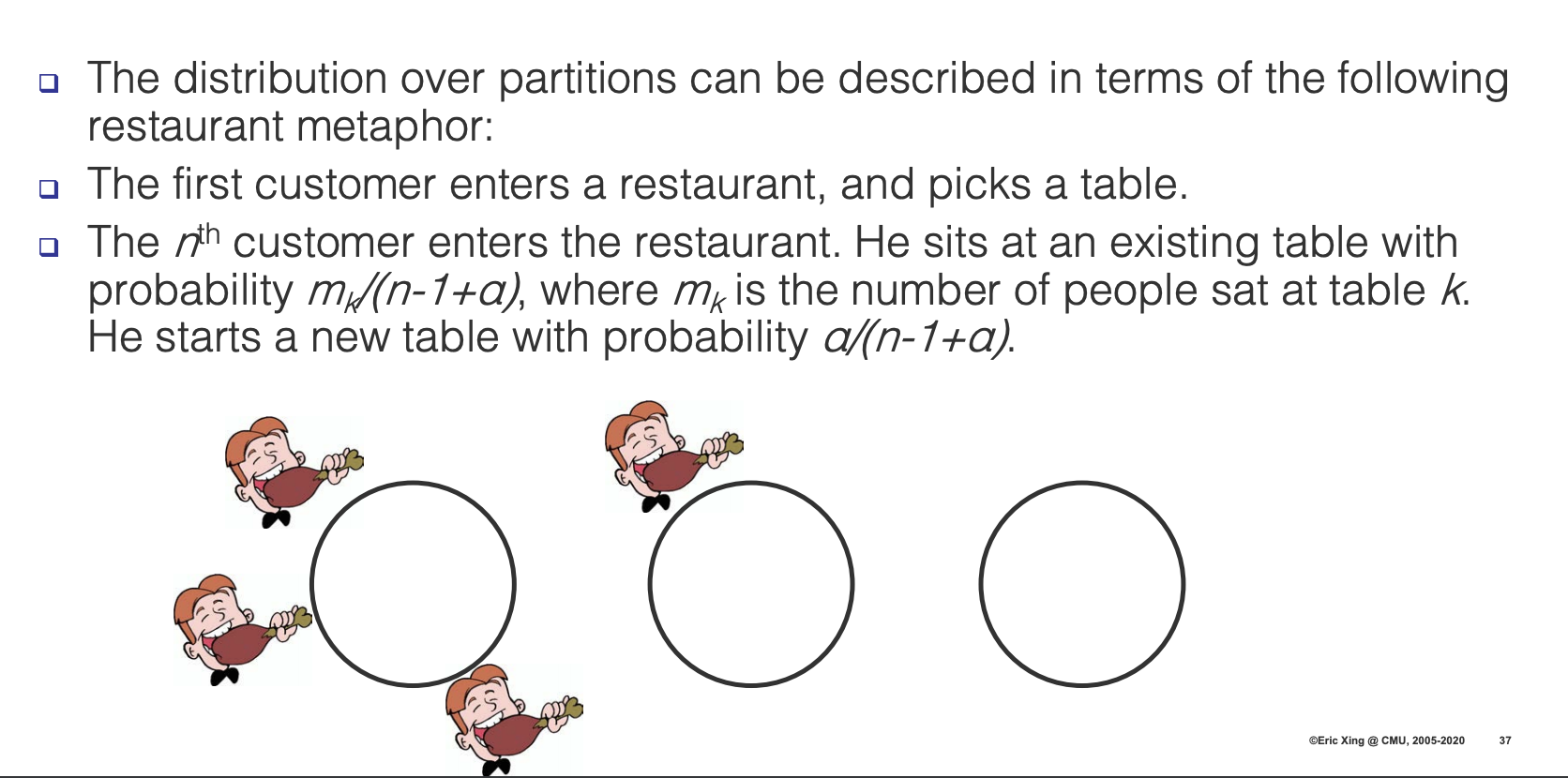
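In symbols, the finite-partition property is usually written as below. The notation is assumed: $G$ is a draw from the process, $H$ is the base measure, $\alpha$ is the concentration parameter, and $A_1, \dots, A_K$ is the partition.

$$
\big(G(A_1), \dots, G(A_K)\big) \sim \mathrm{Dir}\big(\alpha H(A_1), \dots, \alpha H(A_K)\big)
$$

Taking $K = 2$ with cells $A$ and its complement gives $G(A) \sim \mathrm{Beta}\big(\alpha H(A), \alpha (1 - H(A))\big)$, so $\mathbb{E}[G(A)] = H(A)$ and $\mathrm{Var}[G(A)] = H(A)(1 - H(A)) / (\alpha + 1)$: the base measure sets the mean, and a larger $\alpha$ pulls draws closer to it.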

Relating to the Chinese Restaurant Process

- Each block of the partition represents a table at the restaurant

- $\pi_k$ is the probability mass of customers on table $k$

- $\theta_k$ is a draw from the base measure for the corresponding table $k$. This could act as the label for a table.

This could also be explained with the Polya urn process, which is basically identical except that each $k$ represents a color, with $\theta_k$ as a draw from the base measure for that color and $\pi_k$ as the probability mass of that specific color.

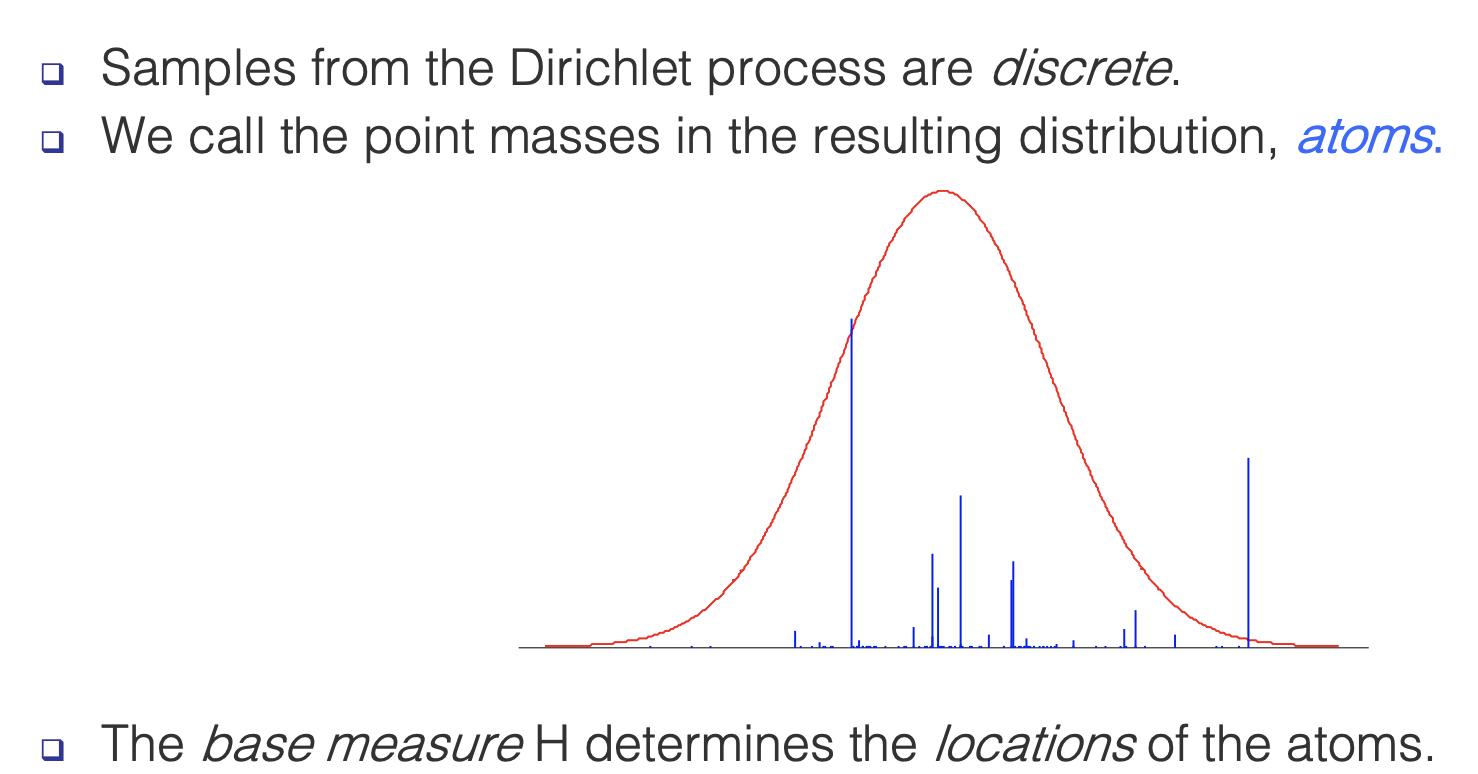
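The seating scheme can be simulated directly. This is a minimal sketch of the standard Chinese restaurant process, assuming concentration parameter `alpha` and a Gaussian base measure for the table labels (both assumptions for illustration); the function name is hypothetical.

```python
import numpy as np

def chinese_restaurant_process(num_customers, alpha, rng):
    """Seat customers one at a time; return each customer's table and the table sizes."""
    assignments = []
    table_sizes = []  # table_sizes[k] = number of customers at table k
    for n in range(num_customers):
        # n customers are already seated. Existing table k is chosen with
        # probability size_k / (n + alpha), a new table with alpha / (n + alpha).
        probs = np.array(table_sizes + [alpha], dtype=float) / (n + alpha)
        table = rng.choice(len(probs), p=probs)
        if table == len(table_sizes):
            table_sizes.append(1)    # open a new table
        else:
            table_sizes[table] += 1  # join an existing table
        assignments.append(int(table))
    return assignments, table_sizes

rng = np.random.default_rng(3)
assignments, table_sizes = chinese_restaurant_process(500, alpha=1.0, rng=rng)
# Label each table with its own draw from the assumed base measure N(0, 1).
table_labels = rng.normal(0.0, 1.0, size=len(table_sizes))
print(len(table_sizes), sorted(table_sizes, reverse=True)[:5])
```

Rerunning with a larger `alpha` tends to open many more tables, while a small `alpha` piles most customers onto a few tables.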

Sampling from the DP

- The base measure does not influence the probability of a point joining a given atom/class; it only influences the locations of the atoms (the value of each class), as shown in the graph above.

- A small alpha produces fewer distinct classes, because a new value is less likely to take on a new class value when alpha is small (see the predictive distribution below).

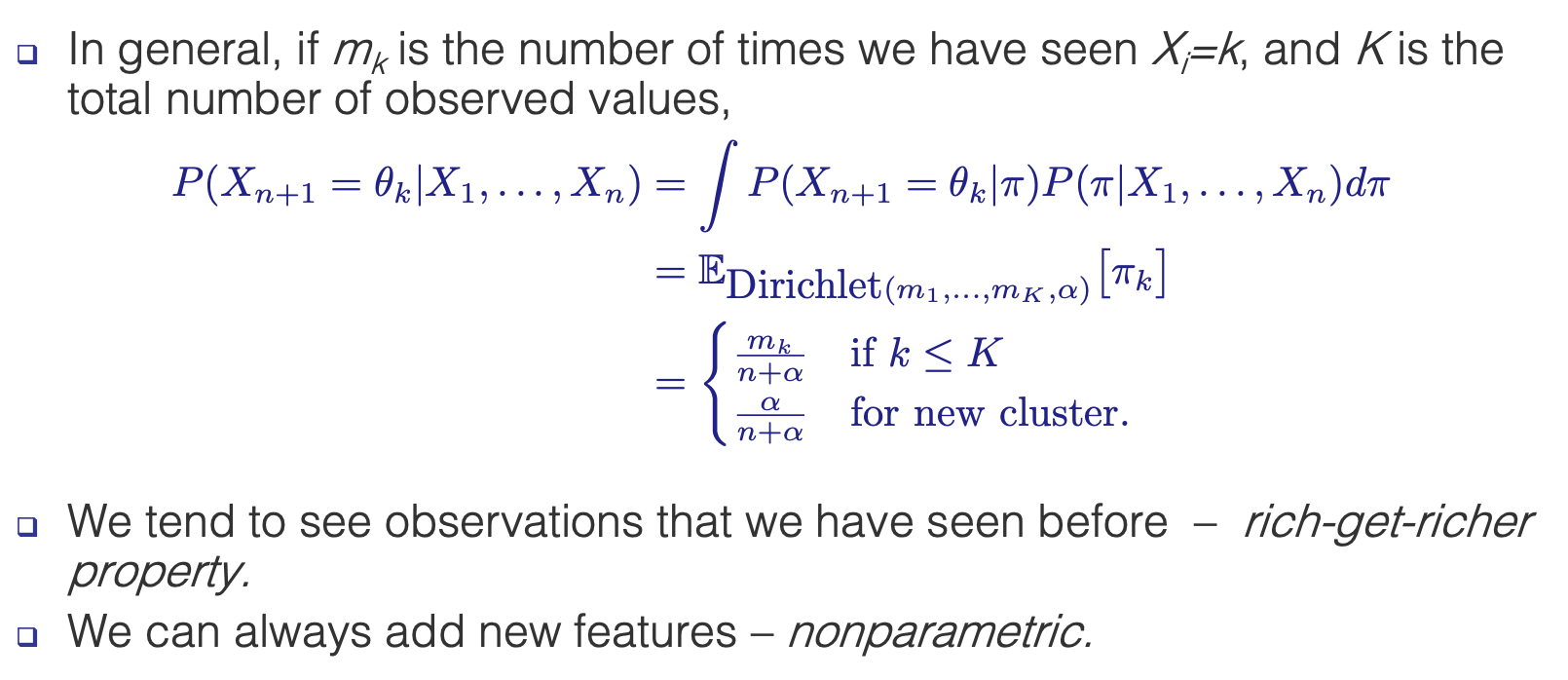

Predictive Distribution

A new data point can either join an existing cluster, or start a new cluster. What is the predictive distribution for a new data point?
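One standard way to write the answer is given below, with the random distribution integrated out. The notation is assumed: $\theta_1, \dots, \theta_n$ are the earlier draws, $\theta_1^*, \dots, \theta_K^*$ are their $K$ distinct values (the clusters), $n_k$ is the number of earlier draws equal to $\theta_k^*$, $H$ is the base measure, and $\alpha$ is the concentration parameter.

$$
\theta_{n+1} \mid \theta_1, \dots, \theta_n \;\sim\; \frac{\alpha}{\alpha + n}\, H \;+\; \sum_{k=1}^{K} \frac{n_k}{\alpha + n}\, \delta_{\theta_k^*}
$$

So the new point joins existing cluster $k$ with probability $n_k / (\alpha + n)$, proportional to the cluster's size, and starts a new cluster (with a fresh value drawn from $H$) with probability $\alpha / (\alpha + n)$. The probabilities sum to one because $\sum_k n_k = n$.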

Hierarchical Dirichlet Process/Chinese restaurant franchise
